
Are You 98% Sure Your Data Is Correct?

In machine learning, neural networks can be presumptuous when judging their own accuracy. So what is the real story behind AI confidence scores? There are some parallels between network confidence and reality, but how can you trust that your network is correct?

Machine learning is a great way to analyze vast amounts of visual data from images, film, or real-time video footage to identify people or meaningful objects within a scene. The results can then be used in smart applications such as facial identification for security systems, attentiveness monitoring in driver monitoring systems, or detection of defective objects on a factory conveyor belt, to name just a few. When the results of classifiers or object detectors are visualized, a percentage called the confidence score is often shown next to each identified item. Below is an example of an object detector in a city environment, with the confidence score shown above each detected object:

But what does a confidence score actually mean, and what is it used for? A common definition describes a confidence score as a number between 0 and 1 that represents the likelihood that the output of a machine learning model is correct and will satisfy a user’s request.

At first glance, this sounds excellent! It seems that the network can somehow determine for itself how likely it is to be correct. On closer inspection, however, this is not the case, as we show below.
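The article does not say how the score itself is produced. In many classifiers and detectors it is the largest value of a softmax over the network's raw outputs (logits). A minimal sketch, assuming a softmax output layer and made-up logits, shows that the computation only compares the logits with each other and never consults the correct answer:

```python
import math

def softmax(logits):
    """Turn raw network outputs (logits) into values in (0, 1) that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three classes: person, bicycle, background.
logits = [2.0, 0.5, 0.1]
probs = softmax(logits)

# The "confidence score" shown next to a detection is the largest value.
# Nothing here checks whether the predicted class is actually right.
confidence = max(probs)
label = probs.index(confidence)
print(label, round(confidence, 3))
```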

A Network Cannot Judge the Correctness of Its Own Output

If a network could judge the correctness of its own output, it would already know the answer, and its score would always be either 0% or 100%, never anything in between. But looking closer at a section of our city image, we see the following:

Here, the network has given the person riding a scooter a confidence score of 81.2%.

To a human, it is clear that the picture shows a person on a scooter, but for some reason, the network is only 81.2% sure. What does 81.2% actually mean? Does it mean the network expects to be wrong in 18.8% of cases showing a person on a scooter? If the score measured correctness, it should be 100%, since the picture does show a person on a scooter. Does it mean we are only seeing 81.2% of a person? What's going on?

The short answer is that confidence is not probability.

Instead, the confidence output of a network should be read as a relative measure, not an absolute metric. In other words, the network is more confident in a 98% identification than in an 81% identification, but neither 98% nor 81% has an absolute interpretation. This is also why a confidence score often comes with a threshold that marks the boundary between accepting and rejecting an output.
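A minimal sketch of how such a threshold is typically applied, assuming hypothetical detections and an assumed threshold of 0.5. The score is only used to sort the outputs and to compare them against the cut-off; it is never read as a probability:

```python
# Hypothetical detections: (label, confidence) pairs from a detector.
detections = [("car", 0.98), ("person", 0.812), ("dog", 0.41), ("sign", 0.27)]

THRESHOLD = 0.5  # assumed value; in practice it is tuned on validation data

def accept(dets, threshold):
    """Keep detections at or above the threshold, most confident first."""
    kept = [d for d in dets if d[1] >= threshold]
    return sorted(kept, key=lambda d: d[1], reverse=True)

print(accept(detections, THRESHOLD))  # [('car', 0.98), ('person', 0.812)]
```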

One way to drive home the relative rather than absolute interpretation is to note that we can easily rescale the absolute confidence, as shown below.

Giving Data a Confidence Boost

The confidence output metric is often based on a large number of computations. However, there is a trivial way in which an engineer can increase (or decrease) the confidence level as they please.

In our city image with the scooter, the network was only 81.2% confident. This is represented by the value 0.812, but we can easily modify the network to compute the square root of the confidence before outputting the answer, in effect making the network computation one step longer. Instead of 0.812, we now get √0.812 ≈ 0.901, or 90%. We just increased the confidence output of the network! Applying the same square root again gives us 95% confidence, and once more gives us 97%. Not bad for a few trivial math operations. Again, we reach the conclusion that there is no valid interpretation of absolute confidence values.
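The arithmetic above can be reproduced in a few lines of Python. The sketch also checks the point made in this section: because the square root is monotonically increasing, it changes every absolute score but never the ranking. The extra scores for "car" and "dog" are made up for illustration:

```python
import math

# Start from the scooter detection's confidence and apply the square root
# repeatedly, as described above.
confidence = 0.812
for step in range(1, 4):
    confidence = math.sqrt(confidence)  # one extra post-processing step
    print(f"after {step} square root(s): {confidence:.3f}")
# after 1 square root(s): 0.901
# after 2 square root(s): 0.949
# after 3 square root(s): 0.974

# Hypothetical scores for other objects in the scene.
scores = {"car": 0.98, "person": 0.812, "dog": 0.41}
boosted = {name: math.sqrt(score) for name, score in scores.items()}

# The square root is monotonically increasing, so the ranking is unchanged.
print(sorted(scores, key=scores.get, reverse=True))   # ['car', 'person', 'dog']
print(sorted(boosted, key=boosted.get, reverse=True))  # ['car', 'person', 'dog']
```

To keep the same accept/reject decisions after such a change, the threshold has to be moved by the same transform (for example from 0.5 to √0.5), which again shows that only the ordering carries information.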

It is clear that we can easily push the absolute confidence value higher or lower. This drives home the point that absolute confidence scores are, in a sense, meaningless, as they can be manipulated to show any value.
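The square root is just one monotonic transform among many. A standard knob with the same property, which the article does not mention, is temperature scaling: dividing the logits by a constant T before the softmax. A minimal sketch with made-up logits shows that the predicted class never changes while the reported confidence moves freely with T:

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with a temperature: T < 1 sharpens, T > 1 flattens the output."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 0.5, 0.1]  # hypothetical logits: person, bicycle, background

for t in (2.0, 1.0, 0.5):
    probs = softmax(logits, temperature=t)
    best = probs.index(max(probs))
    # Same winning class every time; only the displayed confidence changes.
    print(f"T={t}: predicted class {best}, confidence {max(probs):.3f}")
```

When T is fitted on held-out labeled data rather than picked by hand, this same knob is a common way to calibrate a network's scores, which ties in with the need for analysis against real data discussed below.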

Hand-to-hand Combat with a Grizzly Bear

In a recent poll, YouGov asked Americans and Britons how confident they were that they could beat various animals in unarmed combat. What does this have to do with neural network confidence, you ask? Let's find out.

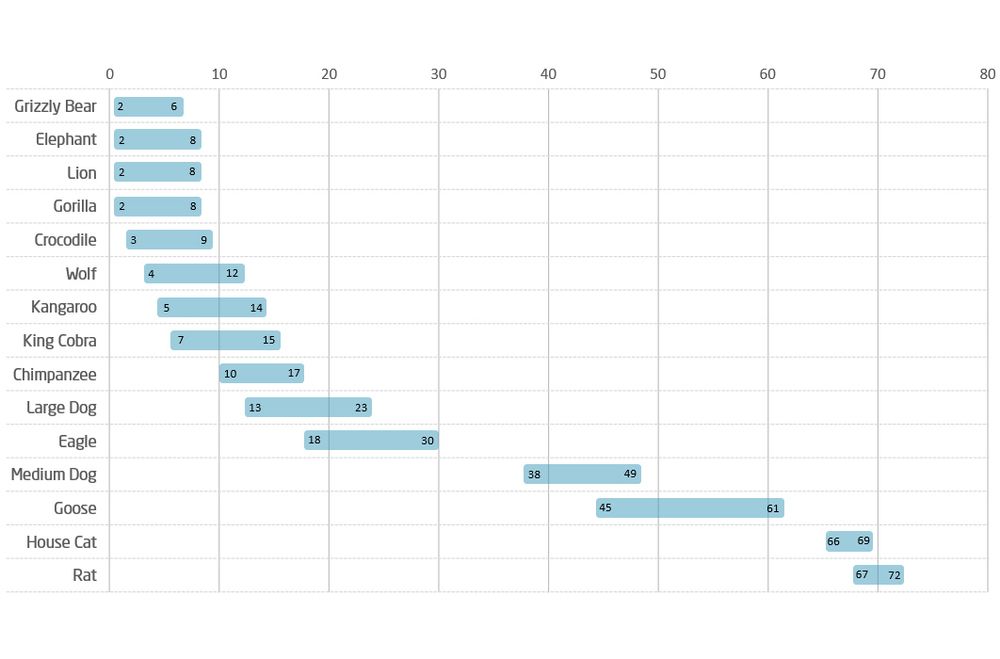

The figure below shows how people in the US and Britain self-assess their chances in hand-to-hand combat with various animals.

Source: Americans are more confident than Britons that they could beat any animal in a fight, YouGov UK

There are several analogies between the self-assessment of people fighting grizzly bears with their hands and the confidence measure of neural networks.

First, it’s a self-assessment. Just like a network producing a confidence score, individuals are asked to estimate their chances in a situation they have probably never experienced: the network may never have seen a person on a scooter, and most American men have never fought a grizzly bear.

Second, how should we interpret the numbers? Just as it is hard to know what to make of an 81% confidence that a person on a scooter is a person, what does it mean in practice that 6% of US men think they can beat a grizzly bear in hand-to-hand combat? Does it mean that if we asked 100 American men to fight a grizzly bear, 94 would run and 6 would fight, and win? Again, the interpretation of human confidence for specific cases is unclear.

And finally, like the confidence measure of a neural network, there is information in the relative self-assessment. For instance, one could conclude that it is easier for Americans to win a fight against a goose (61%) than against a lion (8%). So even if the absolute self-assessed confidence doesn't tell us much, given the choice, people should opt to fight a goose rather than a lion.

How Confident Are You?

At the end of the day, a confidence value by itself is a meaningless number, especially when read as an absolute value. Relative confidence values can hint at where the network assesses its own capabilities to be stronger, but without a thorough analysis of the network's performance against real-world data, even relative confidence measures can be very misleading and easily lead you to misplace your confidence.
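One standard form of that analysis is a calibration check on labeled data the network was not trained on: group predictions by confidence and compare each group's average confidence with how often it was actually right. The single summary number computed here is the expected calibration error (ECE). A minimal sketch, assuming you already have per-prediction confidences and a ground-truth correct/incorrect flag; the bin count and the data are illustrative:

```python
def reliability_table(confidences, correct, n_bins=5):
    """Group predictions into equal-width confidence bins and compare
    each bin's mean confidence with its observed accuracy."""
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # c == 1.0 goes in the last bin
        bins[idx].append((c, ok))
    rows = []
    for i, b in enumerate(bins):
        if not b:
            continue  # skip empty bins
        mean_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(ok for _, ok in b) / len(b)
        rows.append((i / n_bins, (i + 1) / n_bins, len(b), mean_conf, accuracy))
    return rows

def expected_calibration_error(confidences, correct, n_bins=5):
    """Average |mean confidence - accuracy| over bins, weighted by bin size."""
    n = len(confidences)
    return sum(count / n * abs(mean_conf - acc)
               for _, _, count, mean_conf, acc
               in reliability_table(confidences, correct, n_bins))

# Hypothetical held-out data: model confidence and whether each prediction was right.
confs = [0.95, 0.92, 0.90, 0.85, 0.81, 0.78, 0.62, 0.55, 0.45, 0.30]
right = [True, True, False, True, False, True, True, False, False, False]
for lo, hi, count, mean_conf, acc in reliability_table(confs, right):
    print(f"{lo:.1f}-{hi:.1f}: n={count}, mean confidence={mean_conf:.2f}, accuracy={acc:.2f}")
print(f"ECE = {expected_calibration_error(confs, right):.3f}")
```

A well-calibrated network has bins where mean confidence and accuracy roughly match; large gaps show exactly where the absolute numbers should not be trusted.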

So how do you give proper meaning to the numbers? The only way to attain definitive accuracy in neural network outputs is to provide large amounts of structured input where annotations are accurate and consistent. The better you know your data, the better you can understand your confidence score. At Neonode, we achieve this through computer-generated visual input, known as synthetic data. Not only is this a more accurate way to train neural networks, it is also much faster than gathering real-world data. It can also be used to test the robustness and generalization of machine learning models, since synthetic data is not subject to the same biases and confounding factors as real data gathered from real-life photos or film.

Trust in Neonode

Confidence is but one of many parameters that go into determining the validity of a system's output. At Neonode, all our networks are produced by us and trained with extensive synthetic data that is also produced by us. With full control over the end-to-end process, we have designed an innovative methodology that generates similarity scores for an input image, which gives us far more accuracy than relying on confidence scores alone.

In addition, Neonode's mission-critical functionality is built on the idea of graceful degradation: when our networks can't assess an image properly, a more general and robust but less accurate network or process takes over, while signaling to the broader system that signal integrity is lowered.
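A minimal sketch of the general graceful-degradation pattern described above. It is not Neonode's implementation: `precise_model`, `robust_model`, and the convention that the precise model returns `None` when it cannot assess a frame are all hypothetical stand-ins:

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Result:
    value: str
    degraded: bool  # True tells the wider system that signal integrity is lowered

def with_fallback(primary: Callable[[bytes], Optional[str]],
                  fallback: Callable[[bytes], str]) -> Callable[[bytes], Result]:
    """Wrap a precise model with a more general one that takes over when needed."""
    def run(image: bytes) -> Result:
        out = primary(image)  # hypothetical convention: None means "cannot assess"
        if out is not None:
            return Result(out, degraded=False)
        return Result(fallback(image), degraded=True)
    return run

def precise_model(image: bytes) -> Optional[str]:
    # Hypothetical stand-in: gives up on empty frames.
    return "driver looking at road" if image else None

def robust_model(image: bytes) -> str:
    # Hypothetical stand-in: coarser but always answers.
    return "driver present"

pipeline = with_fallback(precise_model, robust_model)
print(pipeline(b"frame"))  # Result(value='driver looking at road', degraded=False)
print(pipeline(b""))       # Result(value='driver present', degraded=True)
```

Returning the degradation flag alongside the value, rather than silently swapping models, is what lets downstream components adjust how much they rely on the output.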

Neonode does the heavy lifting when it comes to fully understanding our networks and interpreting the neural network outputs. We have a deep understanding of when our networks produce valid results, and more importantly, when they don't. This way, our customers can focus on using our information and outputs to define end user experiences rather than trying to interpret the validity of outputs.

Confidence without context is meaningless.